I have the feeling that there is a subtle yet widespread misconception about data-driven research in financial markets, and I will use this article as a starting point for the discussion: Seeking Alpha – Not Even Wrong: Why Data-Mined Market Predictions Are Worse Than Useless by Justice Litle (also published on his website, Mercenary Trader).

The article itself started as a rant against this Yahoo Finance article: Why Boring Is Bullish, which "infers" an 89% chance of bullish action on the S&P from a sample of 18 previous cases with a "low vol" environment similar to the current one.

Let me say clearly that, in my opinion, the Yahoo article is indefensible for a number of reasons (to mention a few: a sample size that is far too small, no robustness analysis, and no mention of how many trials were run), so on this point I agree with Mr Litle.
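To put rough numbers on the first and third objections, here is a small Python sketch. It assumes the 89% figure comes from 16 up moves out of 18 cases (16/18 ≈ 88.9% is the only count out of 18 that rounds to 89%; the exact count is an assumption here), computes a Wilson score interval for that proportion, and then estimates how likely it is that at least one of many rules with no real edge would score 16 or more out of 18 by pure chance. The 50% base rate and the 100 independent trials are hypothetical choices for illustration, not figures from either article.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 ~ 95% level)."""
    p_hat = successes / n
    denom = 1 + z**2 / n
    center = (p_hat + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return center - half_width, center + half_width


def prob_at_least(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def prob_any_trial_hits(k, n, p, trials):
    """Chance that at least one of `trials` independent no-edge rules scores >= k out of n."""
    return 1 - (1 - prob_at_least(k, n, p)) ** trials


if __name__ == "__main__":
    # Assumption: 16 up moves out of 18 cases.
    low, high = wilson_interval(16, 18)
    print(f"16/18 = {16 / 18:.1%}, 95% interval roughly {low:.0%} to {high:.0%}")

    # Hypothetical null: a coin-flip market (p = 0.5) and a researcher who
    # tried 100 independent variants before reporting the best one.
    print(f"P(some variant shows >= 16/18 by chance) = {prob_any_trial_hits(16, 18, 0.5, 100):.1%}")
```

Under these assumptions the 95% interval spans roughly 67% to 97%, and a researcher testing 100 independent no-edge variants would see at least one "16 out of 18" result about 6% of the time. Raising the base rate above 50% would only make such a result easier to obtain by chance.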

However, Mr Litle goes further and explains why equity markets cannot be "boring" right now:

“The potential trajectory of equity markets is DIRECTLY IMPACTED BY the trajectory of debt and currency markets (which are the OPPOSITE of boring now). […] “Calm before the storm boring,” maybe. Plain old boring boring? Ah, no.” […]

and eventually extends his criticism to data mining in financial markets in general:

“Markets are far from simple. In fact they are very complex. As such, predictions based on data mining of a single historical variable or single cherry-picked pattern observation are almost always worse than useless because they ignore a core confluence of factors.” […]

“When it comes to predicting future outputs of complex systems, virtually ALL forms of single-variable statistical thinking are flawed.” […]

“The only way to avoid getting fooled by spurious data or superficial thinking is to put real elbow grease into truly understanding what drives markets and why…and once you have that understanding you don’t need to cherry pick or data mine because you have something better: The ability to assess a confluence of key factors in the present, as impacting important market relationships here and now.”

Now, while I agree that financial markets are very complex and that it’s very easy to be fooled, I believe that these statements about data mining are a tad too generic.

Using a single historical variable or taking into account the influence of multiple factors says nothing per se about how good a prediction is (by "prediction" I mean any kind of statistical inference about the future).

In general, to make a prediction of any value one has to identify certain features (variables) that, combined in a certain way, have some predictive power over future events. This is true for any field and for any prediction method, be it AI or human reasoning.

The hard part of course is finding these features and combining them.

Looking at things this way, the author of the Yahoo article is just claiming that (a certain definition of) low volatility has some explanatory power over future returns. What Mr Litle is saying in response is that monetary policy, debt and currency markets are better features to use, based on his experience and view of the world.

Is this really that different from properly done data mining?

The big question is whether "understanding" the causes of certain market dynamics is a key factor in making them forecastable to some degree (note the quotes around "understanding").

I don’t believe this to be the case.

To draw a parallel with Physics: physicists certainly don't always understand WHY certain things follow a certain law. Rather, they observe a behaviour and try to describe it. If along the way they find some sort of explanation for it, so much the better. But there will always be a further "why" that requires an answer (why do apples fall to the ground? -> gravity -> why does gravity exist? -> relativity -> etc.).

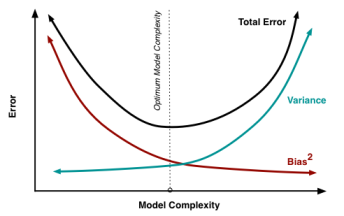

Of course, a key difference from Physics is that financial markets, being the result of complex interactions among billions of people, cannot be entirely described by equations. In practice, this means that with a data-driven approach we have to pay much more attention to developing a framework for evaluating the actual predictive power of any model, which in any case will hardly work "forever".
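As a minimal illustration of why such a framework matters, the Python sketch below mines the best of many random rules on the first half of a synthetic return series and then tests that same rule on the second half. Everything here is an assumption made for illustration: the returns are pure Gaussian noise (so no rule can have a real edge), each hypothetical rule is "go long tomorrow when a random indicator is above zero", and a rule's edge is its hit rate minus the unconditional share of up days in the same period. A simple in-sample/out-of-sample split is only one of many possible evaluation schemes.

```python
import random


def hit_rate(signal, future_returns):
    """Fraction of signal days that were followed by a positive return."""
    hits = [r > 0 for s, r in zip(signal, future_returns) if s]
    return sum(hits) / len(hits) if hits else 0.0


def edge(signal, future_returns):
    """Hit rate when the signal is on, minus the unconditional base rate of up days."""
    base_rate = sum(r > 0 for r in future_returns) / len(future_returns)
    return hit_rate(signal, future_returns) - base_rate


def mine_and_validate(n_days=1000, n_rules=200, seed=42):
    """Pick the best of many random rules in-sample, then test it out-of-sample.

    Returns are pure noise, so no rule has a real edge by construction.
    """
    rng = random.Random(seed)
    returns = [rng.gauss(0.0, 1.0) for _ in range(n_days)]
    split = n_days // 2  # first half: in-sample, second half: out-of-sample

    best_signal, best_in = None, float("-inf")
    for _ in range(n_rules):
        # Hypothetical rule: "be long tomorrow when a random indicator is above zero".
        signal = [rng.gauss(0.0, 1.0) > 0 for _ in range(n_days)]
        # The signal on day t is paired with the return on day t + 1 (no look-ahead).
        in_sample = edge(signal[:split], returns[1:split + 1])
        if in_sample > best_in:
            best_signal, best_in = signal, in_sample

    # Same pairing on the held-out half, using only data the selection never saw.
    out_of_sample = edge(best_signal[split:-1], returns[split + 1:])
    return best_in, out_of_sample


if __name__ == "__main__":
    best_in, best_out = mine_and_validate()
    print(f"best in-sample edge:      {best_in:+.3f}")
    print(f"same rule, out-of-sample: {best_out:+.3f}")
```

With these settings the selected rule typically shows an in-sample edge of several percentage points that vanishes out of sample, which is the pattern one should expect from a mined result with no real predictive power.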

But similar difficulties apply to any kind of discretionary trading. The very fact that there are so many factors in play (and hence so much noise) makes it hard for our brain to analyze the situation objectively, and the many cognitive biases that affect us certainly don't help.

So our "understanding" of the causes of market movements can't really go that far. For example, we might understand that a certain inefficiency exists because some institutions operate under certain constraints, but we won't know how long these constraints will stay in place, or when competitors will pick up on this inefficiency, reducing our profit margin or even causing the market to behave in a totally unpredictable way.

By this I don't mean that using some discretion is pointless; rather, I'm arguing that there is a place for both in trading, and I see no dichotomy here. Pure (properly done) data-driven research and pure macro/discretionary research lead to two different sets of opportunities, which can also overlap in some situations.

Discretionary trading can probably be more responsive to changing market dynamics, whereas a data-driven approach might have its strengths in the portability of its operations to different markets and in how quantifiable it is.

And in any case I strongly believe that any data-driven analysis is only as good as the thought we put into it, and likewise any type of discretionary trading can only benefit from making use of some quantitative analysis.

To comment on one last point raised by Mr Litle:

“Yet we spend roughly zero time on data mining, with no interest in statements like “Over the past X years, the S&P did this X percent of the time.”

Why this contrast? Because markets are a complex sea of swirling and interlocking variables – and it is the historical drivers and qualitative cause-effect relationships are what have lasting value. It is not the output of a spreadsheet that matters – the pattern-based cherry picking lacking insight as to what created the results – but the qualitative relationships truly attributable to joint causation of various outcomes, on a case-by-case basis, with a very big nod to history and context.”

I agree that what matters is indeed finding "relationships" that have real predictive power over the future. But how one finds these relationships is a complex matter, and one has to dig into the specifics of each case to find out whether the analysis has any value, because, generally speaking, the output of a spreadsheet can be as good or as bad as any qualitative relationship one believes to hold.

Andrea